The second column for all rows should be 1,000, but is wrong for both 1e18 and 1e27.

A "floating point number" is typically represented on a computer in 32 bits ("float") or 64 bits ("double"). A floating point number usually has three parts: the sign, the exponent and the mantissa. This is the binary equivalent of the scientific notation you learned in school. A float usually has 8 bits for the exponent and 23 for the mantissa, while a double usually has 11 and 52 respectively. Both have one bit for the sign.
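To see these three fields on a real machine, here is a minimal Python sketch that uses the standard `struct` module to reinterpret a number's bytes and pull out the sign, exponent and mantissa. It assumes the platform uses IEEE 754 floating point (true of essentially all current hardware), and the function names `double_fields` and `float_fields` are made up for this illustration. Real IEEE 754 also stores the exponent with a bias (127 for float, 1023 for double) and leaves the leading 1 of the mantissa implicit, details that the simplified example below skips.

```python
import struct

def double_fields(x: float) -> tuple[int, int, int]:
    """Split a 64-bit double into its (sign, exponent, mantissa) bit fields."""
    # Reinterpret the 8 bytes of the double as one unsigned 64-bit integer.
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63                   # 1 bit
    exponent = (bits >> 52) & 0x7FF     # 11 bits, stored with a bias of 1023
    mantissa = bits & ((1 << 52) - 1)   # 52 bits; the leading 1 is implicit (normal numbers)
    return sign, exponent, mantissa

def float_fields(x: float) -> tuple[int, int, int]:
    """Same split for a 32-bit float: 1 sign, 8 exponent (bias 127), 23 mantissa bits."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    return bits >> 31, (bits >> 23) & 0xFF, bits & ((1 << 23) - 1)

print(double_fields(-2.5))
print(float_fields(-2.5))
```

For -2.5 (that is, -1.25 × 2^1), `double_fields` returns `(1, 1024, 1125899906842624)`: sign 1, exponent 1 + 1023, and mantissa bits 2^50, which encode the fraction 0.25.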

For our purposes, we will simplify this to just three bits for the exponent and four bits for the mantissa. So the exponent can range from -4 to 3 (because it is considered a signed number) and the mantissa can range from 0 to 15 (unsigned).

A floating point number is now 2^exponent × (mantissa/16), and it is negative if the sign bit is set. Here are some sample values (there are 256 bit patterns in total in our example).

| Sign Bit | Exponent (signed) | Mantissa (unsigned) | Value |
|---|---|---|---|
| 0 | 0 | 0 | 0.0000 |
| 0 | 0 | 1 | 0.0625 |
| 0 | 0 | 2 | 0.1250 |
| 0 | 0 | 3 | 0.1875 |
| 0 | 0 | 4 | 0.2500 |

| Sign Bit | Exponent (signed) | Mantissa (unsigned) | Value |
|---|---|---|---|
| 0 | 2 | 0 | 0.0000 |
| 0 | 2 | 1 | 0.2500 |
| 0 | 2 | 2 | 0.5000 |
| 0 | 2 | 3 | 0.7500 |
| 0 | 2 | 4 | 1.0000 |

| Sign Bit | Exponent (signed) | Mantissa (unsigned) | Value (approx.) |
|---|---|---|---|
| 1 | -1 | 0 | 0.0000 |
| 1 | -1 | 1 | -0.0313 |
| 1 | -1 | 2 | -0.0625 |
| 1 | -1 | 3 | -0.0938 |
| 1 | -1 | 4 | -0.1250 |
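The formula is easy to check in code. The following Python sketch computes a value from its three fields and reproduces a few rows of the tables. The byte layout used by `decode_toy_byte` (sign bit first, then three exponent bits in two's complement, then four mantissa bits) is an assumption made for illustration, since the text only fixes the field widths and ranges, not the bit order. Both function names are hypothetical.

```python
def toy_value(sign: int, exponent: int, mantissa: int) -> float:
    """Value of the simplified 8-bit format: 2**exponent * (mantissa / 16), negated if sign is 1."""
    if sign not in (0, 1) or not -4 <= exponent <= 3 or not 0 <= mantissa <= 15:
        raise ValueError("field out of range")
    magnitude = 2.0 ** exponent * (mantissa / 16)
    return -magnitude if sign else magnitude

def decode_toy_byte(byte: int) -> float:
    """Decode one byte, ASSUMING the hypothetical layout [sign:1][exponent:3][mantissa:4]
    with the 3-bit exponent in two's complement (0b100..0b011 -> -4..3)."""
    sign = (byte >> 7) & 1
    raw_exponent = (byte >> 4) & 0b111
    exponent = raw_exponent - 8 if raw_exponent >= 4 else raw_exponent  # sign-extend 3 bits
    mantissa = byte & 0b1111
    return toy_value(sign, exponent, mantissa)

# A few rows from the tables, as (sign, exponent, mantissa)
for row in [(0, 0, 1), (0, 2, 3), (1, -1, 1)]:
    print(row, toy_value(*row))
```

The last row evaluates to exactly -0.03125, which the third table shows rounded to -0.0313.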

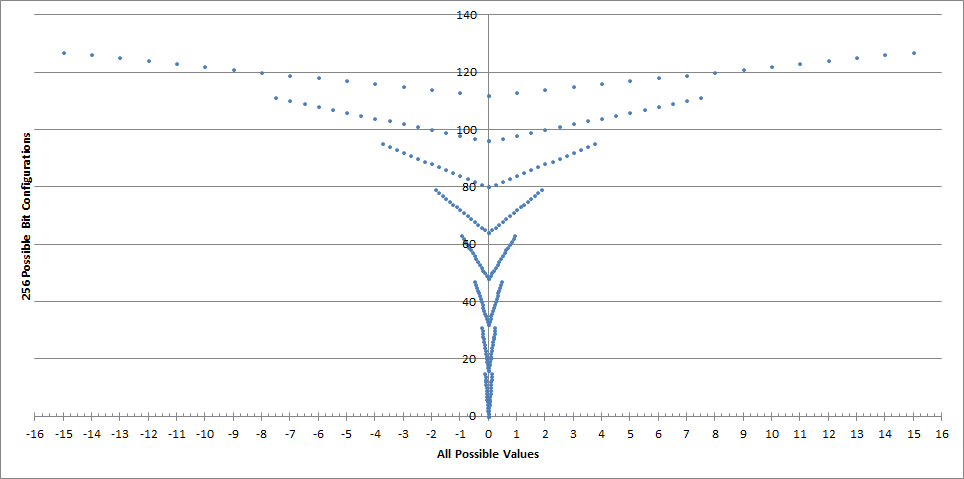

For our simplified example, there are only 256 possible floating point numbers (because there are only 8 bits total, and 28 is 256). The actual possible values look like the following graph.

Note that as the numbers get larger, the gaps between them get larger as well. In our example, even with floating point notation, the largest value is 7.5, and it is not possible to store any number between 7.0 and 7.5. Between 5.0 and 6.0 the only choice is 5.5. As you get closer to 0.0, the possible values are packed more densely.
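Enumerating all 256 combinations makes these gaps concrete. This self-contained Python sketch uses a hypothetical `toy_value` helper that implements 2^exponent × (mantissa/16), collects every value, removes duplicates and measures the spacing at both ends of the range.

```python
from itertools import product

def toy_value(sign: int, exponent: int, mantissa: int) -> float:
    """Hypothetical helper: 2**exponent * (mantissa / 16), negated if sign is 1."""
    magnitude = 2.0 ** exponent * (mantissa / 16)
    return -magnitude if sign else magnitude

# All 2 * 8 * 16 = 256 combinations of sign, exponent (-4..3) and mantissa (0..15).
all_values = [toy_value(s, e, m) for s, e, m in product((0, 1), range(-4, 4), range(16))]
distinct = sorted(set(all_values))  # 0.0 and -0.0 compare equal, so they merge
positives = [v for v in distinct if v > 0]

print("bit patterns:", len(all_values))
print("distinct values:", len(distinct))
print("largest value:", max(distinct))
print("between 5.0 and 6.0:", [v for v in distinct if 5.0 < v < 6.0])
print("between 7.0 and 7.5:", [v for v in distinct if 7.0 < v < 7.5])
print("gap near 0:", positives[1] - positives[0])
print("gap at the top:", positives[-1] - positives[-2])
```

It reports 143 distinct values out of the 256 bit patterns, a largest value of 7.5, `[5.5]` as the only value strictly between 5.0 and 6.0, nothing strictly between 7.0 and 7.5, and a gap of 1/256 next to zero versus 0.5 at the top of the range.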

The crux of the matter is that if you add a very small number to a very large number, the smaller number is partly or entirely lost. With this fixed-size representation, which is very common, there is no way to give a floating point number more bits. In our example above, we are using doubles, with 52 bits for the mantissa. 2^52 is about 4.5×10^15, so a double can resolve only about 1 part in 4.5×10^15 of its own magnitude. With 1,000 as the smaller number, the larger number would have to stay well below 1,000 × 4.5×10^15 ≈ 4.5×10^18 for the addition to be exact. That is why the result goes wrong at 10^18: the sum is rounded off to the nearest representable value. Compared to 10^27, the value 1,000 is so small that for addition or subtraction it is effectively zero.
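The same effect can be reproduced in Python, whose `float` is an IEEE 754 double on typical platforms. The sketch below adds 1,000 to a large number and then subtracts the large number again; the magnitudes 1e9, 1e18 and 1e27 are chosen for illustration and are not necessarily the rows of the program discussed earlier. `recovered` is a hypothetical helper name, and `math.ulp` (Python 3.9 or later) reports the gap between a number and the next larger double.

```python
import math

def recovered(big: float, small: float = 1000.0) -> float:
    """Add small to big, then subtract big again; exact arithmetic would return small."""
    return (big + small) - big

for big in (1e9, 1e18, 1e27):
    # math.ulp(big) is the gap between big and the next larger double.
    print(f"{big:.0e}: recovered {recovered(big)}, gap between doubles {math.ulp(big)}")
```

On an IEEE 754 machine this gives 1000.0 for 1e9, 1024.0 for 1e18 (the gap there is 128, so the sum lands on the nearest multiple of 128), and 0.0 for 1e27.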

There are ways to do extended precision arithmetic, but they are generally much slower, and more complicated to set up.
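As one illustration, Python's standard library offers a few such options. The sketch below redoes the 10^27 + 1,000 calculation with arbitrary-precision integers and with the `decimal` module at a chosen precision, and uses exact `fractions` to show that even 1/3 survives. It is not a performance comparison, and which tool fits depends on the problem.

```python
from decimal import Decimal, localcontext
from fractions import Fraction

big = 10**27

# Python ints have arbitrary precision, so nothing is rounded away.
int_result = (big + 1000) - big

# decimal works with a configurable number of significant digits;
# 50 digits is comfortably more than the 28 needed for 10**27 + 1000.
with localcontext() as ctx:
    ctx.prec = 50
    dec_result = (Decimal(big) + Decimal(1000)) - Decimal(big)

# Fraction keeps exact rational numbers, even ones like 1/3.
frac_result = (Fraction(big) + Fraction(1, 3)) - Fraction(big)

print(int_result, dec_result, frac_result)
```

All three results are exact (1000, 1000 and 1/3), in contrast to double arithmetic, where 1,000 vanishes next to 10^27.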